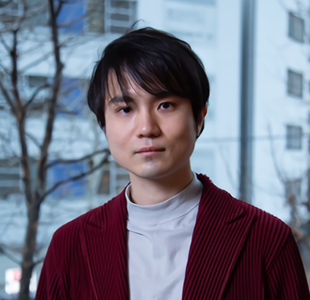

The CEO of Cluster, the largest metaverse platform in Japan, will explain the current state of the metaverse business and how to use it practically.

- Appearance of the metaverse market

- Transition of Cluster’s business

- Potential of the metaverse seen and understood from handling more than 100 projects yearly

- The reality of people and communities that make their home in the metaverse

He studied cosmology and quantum computing at the Faculty of Science of Kyoto University. After dropping out of graduate school, he spent about three years as a recluse. In 2015, he founded the VR technology start-up “Cluster.” In 2017, he released “Cluster,” a VR platform that allows users to hold large-scale virtual events. It has since evolved into a metaverse platform that allows users not only to hold events but also to talk with friends as their favorite avatars and to publish online games for others to play. He was selected as one of the “30 Japanese under 30 who will change the world” by the business magazine “Forbes JAPAN.” He is the author of “Metaverse: Good-bye Atom’s Era” (Shueisha, 2022, Japan).

Rhizomatiks first became involved in the creation of artistic performances in 2005, and since 2010 has been an active practitioner in the entertainment realm. Our work primarily revolves around the development and implementation of technologically informed stage performances. In this presentation, I will go behind the scenes of our performance work.

Daito graduated from the Department of Mathematics, Faculty of Science, Tokyo University of Science, and from the International Academy of Media Arts and Sciences (IAMAS) in Gifu, Japan. He founded Rhizomatiks in 2006 after working as an adjunct instructor at the Tokyo National University of Fine Arts and Music. In 2016, he worked as the technical director and AR director for the Flag Handover Ceremony presented at the closing ceremony of the Rio Olympics.

Brain-Computer Interfaces (BCI) allow people to interact directly through their brain activity. These technologies fascinate people and have inspired many science fiction movies and books, where they are frequently presented as the future of our interactions in the real world as well as in digital and virtual universes. In this presentation, we will review the possibilities they offer in combination with virtual and augmented reality technologies. We will discuss the most promising application fields, such as sports, medicine, training, and entertainment. We will describe representative examples and prototypes developed in our laboratory over the past few years. Finally, we will list the main difficulties and remaining scientific challenges that must be addressed before BCI can be considered a viable alternative in extended reality.

Anatole Lécuyer is Director of Research and Head of the Hybrid research team at Inria, the French National Institute for Research in Computer Science and Control, in Rennes, France. His research interests include virtual reality, haptic interaction, 3D user interfaces, and brain-computer interfaces (BCI). He has served as Associate Editor of the journals “IEEE Transactions on Visualization and Computer Graphics,” “Frontiers in Virtual Reality,” and “Presence,” and as Program Chair of the IEEE Virtual Reality Conference (2015-2016). He is author or co-author of more than 200 scientific publications. Anatole Lécuyer received the Inria-French Academy of Sciences Young Researcher Prize in 2013 and the IEEE VGTC Technical Achievement Award in Virtual/Augmented Reality in 2019, and was inducted into the inaugural class of the IEEE Virtual Reality Academy in 2022.

https://people.rennes.inria.fr/Anatole.Lecuyer/

The advent of technologies that modify ourselves in virtual and even real environments allows us to dramatically change and expand the definitions of “self” and “real world.” How, then, would our perception, behavior, and underlying neural activity change if the constraints on our body as a physical self and on the surrounding environment were removed? To address this novel question, our research group has been trying to elucidate the neural basis of bodily transformation. In this talk, we present our recent results on the embodiment of a robotic “sixth finger” that can be added to the innate fingers and controlled independently of other body parts, showing how our perception, behavior, and neural activity change, based on psychophysical and functional neuroimaging evidence. Given these findings, we would like to discuss how flexibly humans can accept a new self and environment.

Yoichi Miyawaki is a professor at the Graduate School of Informatics and Engineering, the University of Electro-Communications, Japan. After receiving his Ph.D. from the University of Tokyo in 2001, he joined the RIKEN Brain Science Institute in 2001 and then ATR Computational Neuroscience Laboratories in 2005. He has directed his lab at the University of Electro-Communications since 2017. His major interest is neuroscience, particularly human neuroimaging, such as functional magnetic resonance imaging (fMRI) and magnetoencephalography (MEG), and the analysis of its data using statistical machine learning.

http://www.cns.mi.uec.ac.jp/miyawaki/index.html

Virtual reality and telexistence technologies have made it possible to create and transfer our perceptions and behaviors over distance, and today these technologies are starting to be deployed in our society. In this talk, the speaker will introduce his research activities in the KMD Embodied Media project, which aims to enhance and connect human embodied experiences based on haptics technologies, and the recently started "Project Cybernetic being" under the Japanese research initiative called Moonshot, which aims to develop technologies that enable people to realize their abilities to the fullest and share their diverse skills and experiences with others over a digital network.

After receiving his Ph.D. in Information Science and Technology from the University of Tokyo in 2010, he joined the Keio University Graduate School of Media Design (KMD), where he directs the KMD Embodied Media Project and conducts research on, and social deployment of, embodied media that transfer, enhance, and create human experiences with digital technologies. His areas of research expertise include haptics, embodied interaction, virtual reality, and telexistence. He also promotes activities in haptic design and superhuman sports, and serves as a project manager of the Cybernetic being project under the Moonshot R&D program.

http://embodiedmedia.org

http://cybernetic-being.org

Projection mapping is a powerful tool for realizing mixed reality across the whole reality-virtuality continuum. Through projection mapping, the controllability of the digital world permeates the physical world, allowing users to manipulate the appearance of physical surfaces at will. In this presentation, the speaker will share computational display techniques that have overcome technical limitations of projector devices, such as shallow depth of field, which previously prevented desired appearances from being reproduced on arbitrary physical surfaces. Then, they will discuss the lack of naturalness of projection mapping-based augmentation: for example, projection mapping works only in a dark environment, and users cannot get close to the surface because of the shadows they cast. Finally, they will introduce their recent attempts to solve these technical problems.

Daisuke Iwai is an Associate Professor at the Graduate School of Engineering Science, Osaka University, Japan. His research interests include augmented reality, projection mapping, and human-computer interaction. He is currently serving as an Associate Editor of IEEE Transactions on Visualization and Computer Graphics (TVCG), and previously served as Program Chair of the International Conference on Artificial Reality and Telexistence (ICAT) (2016, 2017), the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (2021, 2022), and the IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (2022). His publications received Best Paper Awards at IEEE VR (2015), the IEEE Symposium on 3D User Interfaces (3DUI) (2015), and IEEE ISMAR (2021).